I first learned about AI and bias in 2016 from my master’s student Joy Buolamwini. She was constructing a digital “magic mirror” that would blend the image of her face with that of one of her heroes, Serena Williams. For the project to work, an algorithm needed to identify Joy’s facial features so it could map her eyes, nose and mouth to the computer-generated image of the tennis star.

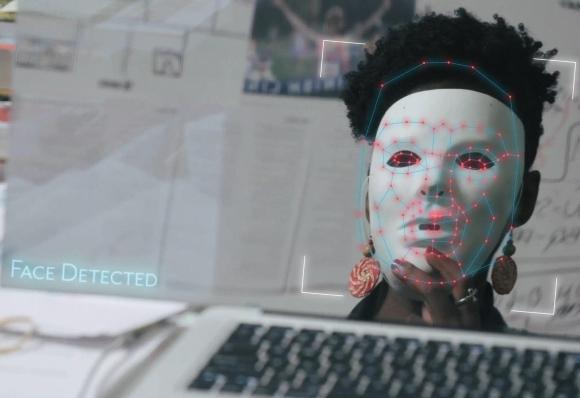

In a video Joy made, the algorithm identified the facial features of her Asian-American colleague immediately. Faced with Joy’s darker face, it failed entirely. Then Joy put on a featureless white mask, and the algorithm sprang into action. It was a profound demonstration that Joy’s real face was invisible to an algorithm that could readily identify a cartoonishly simple face, so long as it was white.

Buolamwini’s video turned into an influential audit of the racial and gender biases of machine vision systems, a doctoral dissertation and eventually the Algorithmic Justice League, an advocacy organisation focused on fairness in artificial intelligence. And it sent me down the path of considering which technical problems could be solved with better data, and which ones require us to build better societies.

The algorithm Joy was testing was better at detecting lighter faces than darker ones because it had been trained on many pale faces and fewer melanin-rich ones. This likely wasn’t a conscious decision. Instead, the creators of these algorithms worked with existing data sets like “Labeled Faces in the Wild,” a set of images collected from news articles published online. That set is 78 per cent male and 84 per cent white, reflecting societal biases about what counts as news and who becomes famous. By training facial recognition systems on such data sets, their creators ended up embedding biases about who is important and newsworthy into their systems.

The problem Joy identified is fixable: companies can retrain AI models on a richer set of faces, including more women and more people of colour. IBM responded to criticism of biases in its algorithms in part by building a new data set using imagery from Bollywood films. In a stunningly problematic attempt at problem-solving, Google allegedly sent contractors to major American cities to capture images of dark-skinned homeless people in exchange for $5 Starbucks gift cards. Given better data, these models perform better on darker faces.

Pioneering tech journalist Julia Angwin led a team at ProPublica that investigated a very different algorithmic bias problem. Broward County, Florida, uses an algorithmic system called Compas to calculate “risk scores” for criminal defendants. The risk score estimates the likelihood that an individual will commit another crime, information judges can use when deciding whether to release a defendant on bail or how long a prison sentence to impose.

Angwin’s team compared the system’s risk assessments with the actual criminal records of the people it had scored. They found that it falsely flagged black defendants as future criminals at nearly twice the rate of white defendants, and mislabelled white defendants as low risk more often than black defendants.
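The comparison Angwin’s team made comes down to counting, separately for each group, how often the tool’s mistakes fall in each direction. The sketch below is a minimal illustration under stated assumptions, not ProPublica’s analysis: the `Record` type, the `error_rates_by_group` function and the toy numbers are all hypothetical, and “reoffended” stands in for whatever follow-up outcome is actually available (in practice, a new arrest).

```python
from collections import defaultdict
from typing import NamedTuple


class Record(NamedTuple):
    group: str         # demographic group (hypothetical labels)
    flagged: bool      # did the tool score this person as high risk?
    reoffended: bool   # did the follow-up outcome (e.g. re-arrest) occur?


def _rate(numerator, denominator):
    # Return NaN rather than dividing by zero for an empty cell.
    return numerator / denominator if denominator else float("nan")


def error_rates_by_group(records):
    """Return {group: (false_positive_rate, false_negative_rate)}."""
    cells = defaultdict(lambda: {"tp": 0, "fp": 0, "tn": 0, "fn": 0})
    for r in records:
        c = cells[r.group]
        if r.reoffended:
            c["tp" if r.flagged else "fn"] += 1
        else:
            c["fp" if r.flagged else "tn"] += 1
    return {
        group: (
            _rate(c["fp"], c["fp"] + c["tn"]),  # flagged, but did not reoffend
            _rate(c["fn"], c["fn"] + c["tp"]),  # rated low risk, but did reoffend
        )
        for group, c in cells.items()
    }


# Made-up records: 20 people per group, 10 of whom reoffend.
toy = (
    [Record("A", True, False)] * 4 + [Record("A", False, False)] * 6
    + [Record("A", False, True)] * 2 + [Record("A", True, True)] * 8
    + [Record("B", True, False)] * 2 + [Record("B", False, False)] * 8
    + [Record("B", False, True)] * 4 + [Record("B", True, True)] * 6
)
print(error_rates_by_group(toy))
```

On this made-up data the function returns `{'A': (0.4, 0.2), 'B': (0.2, 0.4)}`: people in group A who do not reoffend are wrongly flagged twice as often as those in group B, while reoffenders in group B are wrongly rated low risk twice as often. That is the same shape of disparity the investigation reported, with the two errors pointing in opposite directions for the two groups.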

As with bias in computer vision, the poor performance of the Compas algorithm is a data problem. But it’s a vastly harder problem to solve. The reason Compas predicts that black Americans are more likely to be re-arrested is that black Americans are five times more likely to be arrested for a crime than white Americans. This does not necessarily mean that black Americans are more likely to commit crimes than Americans of other races—black and white Americans have similar rates of drug use, but wildly disparate arrest rates for drug crimes, for example. Compas cannot predict criminal risk fairly because policing in America is so unfair to black Americans.
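A small simulation shows why more arrest data cannot fix this on its own. It assumes, purely for illustration, two groups that offend at exactly the same rate, where an offence by a member of group B is twice as likely to end in arrest; that no one is arrested without an offence; and that the “model” sees only group membership and predicts each group’s observed arrest rate (the maximum-likelihood fit for such a model). Tools like Compas do not ask about race directly, but a feature such as the number of prior arrests would inherit the same distortion. The `simulate` function and all of its numbers are hypothetical.

```python
import random


def simulate(n_per_group, offence_rate, arrest_prob, seed=0):
    """Return {group: (observed offence rate, learned arrest 'risk')}."""
    rng = random.Random(seed)
    results = {}
    for group, p_arrest in arrest_prob.items():
        offences = arrests = 0
        for _ in range(n_per_group):
            # Assumption: every group offends at the same true rate...
            offended = rng.random() < offence_rate
            # ...but only arrests are recorded, and their odds differ by group.
            arrested = offended and rng.random() < p_arrest
            offences += offended
            arrests += arrested
        # A model whose only input is group, fitted by maximum likelihood to
        # arrest labels, simply predicts the group's observed arrest rate.
        results[group] = (offences / n_per_group, arrests / n_per_group)
    return results


results = simulate(100_000, offence_rate=0.3, arrest_prob={"A": 0.3, "B": 0.6})
for group, (offence, risk) in results.items():
    print(f"group {group}: true offence rate {offence:.2f}, learned risk {risk:.2f}")
```

Both groups come out with a true offence rate of about 0.30, yet the learned “risk” is roughly 0.09 for group A and 0.18 for group B. The model faithfully reproduces the disparity in policing, not a disparity in behaviour, and collecting more arrest records only makes that estimate more precise.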

Some of the most impressive AI systems learn by using a kind of “time travel” in their computations. Mozziyar Etemadi at Northwestern University Feinberg School of Medicine has developed an AI algorithm that detects lung tumours on CT scans with 94 per cent accuracy, compared with 65 per cent for experienced radiologists. Etemadi’s system is so good because it can look at an early scan of a tumour, taken years before a cancer is detected, and compare it with a scan taken years later once the tumour is visible, learning the early warning signs of an impending cancer. It doesn’t just get better at classifying images of early-stage tumours; it “cheats” by learning from images of the mature tumours as well, so it knows which early ones will turn out to be benign and which malignant.
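At heart, this kind of “time travel” is a way of labelling training data: each early scan is given a label taken from what later happened to the same patient. The details of Etemadi’s pipeline aren’t described here, so what follows is only a generic sketch of outcome-based labelling under assumed inputs. The function name, the record layout and the three-year horizon are hypothetical, and the sketch simplifies by treating a patient with no later malignant diagnosis as a negative, whereas a real study would need to confirm adequate follow-up.

```python
from datetime import date


def label_scans_with_outcomes(scans, diagnoses, horizon_days=3 * 365):
    """Label each early scan with an outcome taken from the patient's future.

    scans:     list of (scan_id, patient_id, scan_date)
    diagnoses: dict of patient_id -> (diagnosis_date, "malignant" or "benign")
    Returns [(scan_id, label)], where label is 1 if a malignant tumour was
    diagnosed within horizon_days after the scan, else 0.
    """
    labelled = []
    for scan_id, patient_id, scan_date in scans:
        label = 0
        outcome = diagnoses.get(patient_id)
        if outcome is not None:
            diagnosis_date, finding = outcome
            days_later = (diagnosis_date - scan_date).days
            # The label comes from a diagnosis made after the scan was taken.
            if finding == "malignant" and 0 <= days_later <= horizon_days:
                label = 1
        labelled.append((scan_id, label))
    return labelled


scans = [
    ("s1", "p1", date(2015, 3, 1)),
    ("s2", "p2", date(2015, 6, 1)),
    ("s3", "p3", date(2016, 1, 1)),
]
diagnoses = {
    "p1": (date(2017, 2, 1), "malignant"),  # diagnosed about two years later
    "p2": (date(2016, 6, 1), "benign"),
}
print(label_scans_with_outcomes(scans, diagnoses))
```

Scan `s1` is labelled malignant because its patient was diagnosed about two years later, even if the tumour was hard to see at the time; that later outcome is precisely the signal a model trained on these labels learns to pick out of the early image.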

The time-travel method doesn’t solve the problem of the Compas algorithm. We can look at the predictions Compas makes about whether someone is likely to be arrested in the future and collect data about how accurate those predictions prove to be. Like Angwin, we may find that the system overpredicts the likelihood of black men being arrested. But even if we retrain the system on this new data, we’re still baking in biases, because the outcome we can measure is arrest, not crime, and black men in America are more likely than white men to be arrested for the same crimes.

To fix Compas’s algorithm, you would need to counterbalance the racially biased data it has been trained on with a heavy dose of arrest data from US communities that don’t experience racial injustice, much as developers improved facial recognition algorithms by adding more diverse faces. This poses a significant challenge for the developers of AI systems. Step one: end racial injustice across communities. Step two: collect data. Step three: retrain your algorithms.

The problems that Buolamwini and Angwin have identified have helped spawn a new branch of AI research called FAccT (Fairness, Accountability and Transparency). FAccT researchers run up against hard mathematical truths: it is impossible to reduce certain types of AI error without increasing the probability of others. When two groups have different base rates, for instance, an imperfect risk score cannot be equally well calibrated for both groups while also giving them equal false positive and false negative rates. But the problems of embedded bias in AI also suggest lessons beyond computer science.
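One of these trade-offs follows directly from the definitions of the error rates. Suppose a tool is equally precise for two groups (the same share of the people it flags really do reoffend, its positive predictive value or PPV) and misses the same share of reoffenders in each (its false negative rate, FNR). Then any difference in the groups’ base rates forces a difference in their false positive rates. The sketch below simply evaluates that identity; the function name and the example numbers are hypothetical.

```python
def false_positive_rate(base_rate, ppv, fnr):
    """FPR implied by a group's base rate, precision (PPV) and miss rate (FNR).

    For a group of N people, base_rate * N truly reoffend, so:
      true positives  TP = (1 - fnr) * base_rate * N
      PPV = TP / (TP + FP)   =>   FP = TP * (1 - ppv) / ppv
      FPR = FP / ((1 - base_rate) * N)
    """
    return (base_rate / (1 - base_rate)) * ((1 - ppv) / ppv) * (1 - fnr)


# Two hypothetical groups scored by one tool that is equally precise
# (same PPV) and misses equally often (same FNR) in both groups.
for name, base_rate in [("group 1", 0.5), ("group 2", 0.3)]:
    fpr = false_positive_rate(base_rate, ppv=0.6, fnr=0.3)
    print(f"{name}: base rate {base_rate:.0%}, false positive rate {fpr:.0%}")
```

With a PPV of 0.6 and an FNR of 0.3 in both groups, the loop reports a false positive rate of 47% for the group with a 50% base rate and 20% for the group with a 30% base rate. In the Compas setting the “base rate” is itself a recorded re-arrest rate, so unequal policing feeds straight into unequal error rates.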

AI systems extrapolate from human behaviour. We teach machines how to translate by showing them books available in, say, both Hungarian and English. Hungarian is a gender-neutral language: the pronoun ő can mean “he, she or it.” But when a Hungarian sentence says “ő is a nurse,” most machine translations render it as “She is a nurse,” whereas “ő is a doctor” becomes “He is a doctor.” That’s not the conscious decision of a sexist programmer; it’s the product of hundreds of years of assuming that women are nurses and men are doctors. Thanks to pressure from FAccT researchers, companies like Google are fixing their algorithms to be less sexist. But perhaps the most important work FAccT researchers can do is to show us which social biases get encoded into our models: forms of discrimination so pervasive that we have simply learned to live with them.
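Modern translation systems are large neural networks, not lookup tables, but a drastically simplified stand-in shows how a corpus’s habits become a model’s defaults. This toy assumes the system resolves the gender-neutral ő by picking whichever English pronoun most often appeared with the same occupation in its training text; the corpus, the sentence pattern and both function names are hypothetical.

```python
from collections import Counter, defaultdict


def learn_pronoun_counts(english_sentences):
    """Count which pronoun appears in sentences of the form '<he/she> is a <job>'."""
    counts = defaultdict(Counter)
    for sentence in english_sentences:
        words = sentence.lower().rstrip(".").split()
        if len(words) == 4 and words[0] in ("he", "she") and words[1:3] == ["is", "a"]:
            counts[words[3]][words[0]] += 1
    return counts


def translate_gender_neutral(occupation, counts):
    """Render Hungarian 'ő is a <job>' with the corpus's most frequent pronoun."""
    seen = counts.get(occupation)
    if not seen:
        return f"He or she is a {occupation}."  # no evidence either way
    pronoun, _ = seen.most_common(1)[0]
    return f"{pronoun.capitalize()} is a {occupation}."


corpus = [
    "She is a nurse.", "She is a nurse.", "He is a nurse.",
    "He is a doctor.", "He is a doctor.", "She is a doctor.",
]
counts = learn_pronoun_counts(corpus)
print(translate_gender_neutral("nurse", counts))   # She is a nurse.
print(translate_gender_neutral("doctor", counts))  # He is a doctor.
```

Nothing in the code mentions gender roles; the skew comes entirely from the six sentences it was given.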

Companies around the world are replacing expensive, error-prone humans with AI systems. At their best, these systems outperform the best-trained humans. But often they fail in consistent ways that reveal the sexism, racism and other systemic biases of our society. We should work to ensure these biased systems don’t unfairly deny bail to black defendants. But we should work even harder to create a society where black people are not arrested at unfairly high rates.

AI systems calcify systemic biases into code. That’s why it’s so important to interrogate them and prevent biased algorithms from harming people who are ill-equipped to challenge the opaque, apparently oracular decisions those systems make.

But the trouble AI has with bias can serve as a roadmap out of some of society’s most pernicious and ingrained problems. What if our response to bias in AI wasn’t just to fix the computers, but to fix the society that trains them?