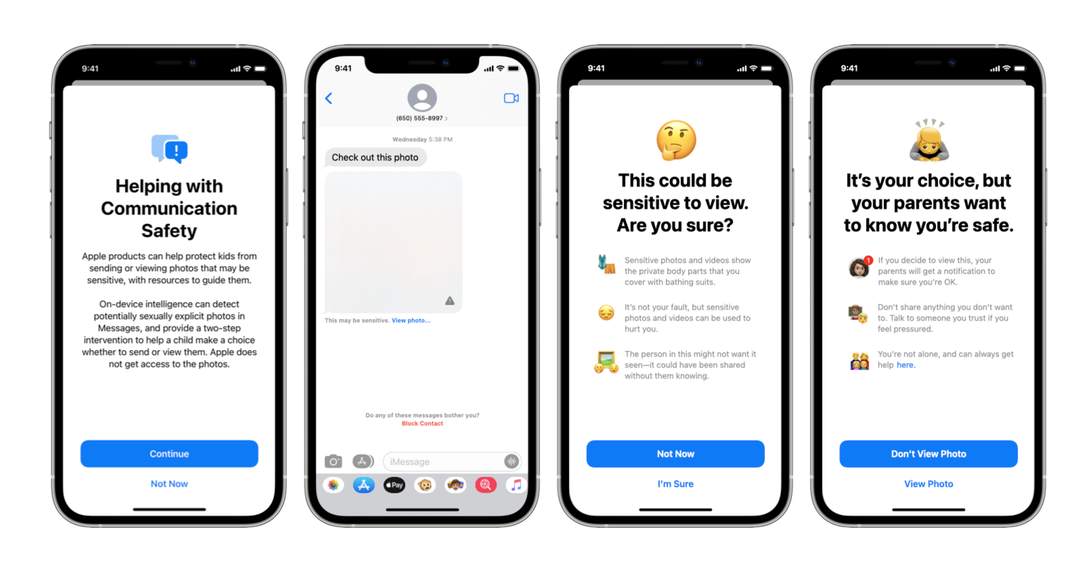

Apple's alert if a child sends or receives a sexually explicit image on the Messages app.

Apple

Apple for years has focused on adding new programs to its phones, all designed to make life easier. Its systems scan emails for new calendar appointments, and its Siri voice assistant suggests calling friends on their birthdays. But Apple's latest feature is focused on child abuse.

The tech giant said in a new section of its website published Thursday that it plans to add scanning software to its iPhones, iPads, Mac computers and Apple Watches when the new iOS 15, iPadOS 15, MacOS Monterey and WatchOS 8 operating systems launch in the fall. The new program, which Apple said is designed to "limit the spread of child sexual abuse material," is part of a new collaboration between the company and child safety experts.


Apple said it'll update Siri and search features to provide parents and children with information to help them seek support in "unsafe situations." The program will also "intervene" when users try to search for child abuse-related topics. Apple will also warn parents and children when they might be sending or receiving a sexually explicit photo using its Messages app, either by hiding the photo behind a warning that it may be "sensitive" or adding an informational pop-up.

But the most dramatic effort, Apple said, is to identify child sexual abuse materials on the devices themselves, with a new technology that'll detect these images in Apple's Photos app with the help of databases provided by the National Center for Missing and Exploited Children. Apple said the system is automated and is "designed with user privacy in mind," with the system performing scans on the device before images are backed up to iCloud. If the system determines it has identified abusive imagery, it can share those photos with representatives from Apple, who'll act from there. The Financial Times earlier reported Apple's plans.

While some industry watchers applauded Apple's efforts to take on child exploitation, they also worried that the tech giant might be creating a system that could be abused by totalitarian regimes. Other technology certainly has been abused, most recently software from Israeli firm NSO Group, which makes government surveillance tech. Its Pegasus spyware, touted as a tool to fight criminals and terrorists, was reportedly used to aim hacks at 50,000 phone numbers connected to activists, government leaders, journalists, lawyers and teachers around the globe.

"Even if you believe Apple won't allow these tools to be misused there's still a lot to be concerned about," tweeted Matthew Green, a professor at Johns Hopkins University who's worked on cryptographic technologies.

Apple didn't immediately respond to a request for comment.

To be sure, other tech companies have been scanning photos for years. Facebook and Twitter both have worked with the National Center for Missing and Exploited Children and other organizations to root out child sexual abuse imagery on their social networks. Microsoft and Google, meanwhile, use similar technology to identify these photos in emails and search results.

What's different with Apple, critics say, is that it's scanning images on the device, rather than after they've been uploaded to the internet.