Data modeling comprises the methodologies for creating representations of data, often visual ones, that help users understand the values and associations behind the data’s potential underlying value.

Data modeling is used to define and analyze the data requirements to support data mining and data analytics. The data modeling process involves professional data modelers working closely with business stakeholders as well as potential users of a system.

In this article, we discuss the data model, types of data models, data modeling techniques, and examples.


A data model is a visual representation of data elements and the relations between them. It is the fundamental method used to leverage abstraction in an information system. Data models define the logical structure of data, how data elements are connected, and how the data is processed and stored in information systems.

Data models provide the conceptual tools for describing an information system at the data abstraction level. They enable users to decide how data will be stored, leveraged, updated, accessed, and shared across an organization.

Data models may also provide a portrait of the final system and how it will look after implementation. They help in the development of effective information systems by supporting the definition and structure of data on behalf of relevant business processes. They also facilitate the communication of business and technical needs for the development of an action plan.

Earlier data models were often “flat data models,” in which all data was laid out in a single plane, such as one two-dimensional table. This limited them, and flat models could introduce duplications and anomalies. Modern data models are more likely to be multidimensional, and they are effective and useful in the development of business and IT strategy.


The ANSI/X3/SPARC committee (Standards Planning and Requirements Committee) described a three-schema concept in 1975. The three kinds of data-model instances are the conceptual schema, the logical schema, and the physical schema.


Conceptual Schema

A conceptual data model or conceptual schema is a high-level description of information used in developing an information system, such as database structures. It is a map of concepts and the relationships between them, typically including only the main concepts and the main relationships.

The conceptual schema describes the semantics of an organization and represents a series of assertions. It may exist on various levels of abstraction. It hides the internal details of physical storage structures and focuses instead on describing entities, data types, relationships, and constraints. The conceptual schema design process takes the information requirements for an application as input and produces a schema expressed in a conceptual modeling notation.
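As a rough illustration, a conceptual schema can be written down as a set of concepts plus assertions about how they relate. The sketch below uses hypothetical concepts (`Customer`, `Order`, `Product`) that are assumptions for this example, not part of any particular system. It records only the main concepts and relationships and says nothing about storage.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Relationship:
    subject: str  # concept the assertion starts from
    verb: str     # how the two concepts are related
    obj: str      # concept the assertion points to


# Hypothetical concepts for an order-handling domain (illustrative only).
CONCEPTS = {"Customer", "Order", "Product"}

RELATIONSHIPS = [
    Relationship("Customer", "places", "Order"),
    Relationship("Order", "contains", "Product"),
]


def assertions(relationships):
    """Render each relationship as a plain-language assertion."""
    return [f"{r.subject} {r.verb} {r.obj}" for r in relationships]


# Every relationship must connect concepts that the schema actually names.
for r in RELATIONSHIPS:
    assert r.subject in CONCEPTS and r.obj in CONCEPTS

print(assertions(RELATIONSHIPS))
```

Nothing here mentions tables, columns, or keys; those decisions belong to the logical and physical levels.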

Logical Schema

A logical data model or logical schema is a representation of the abstract structure of the information domain that defines all the logical constraints applied to the stored data. It describes a specific problem domain independently of any particular database management product or storage technology, and it defines views, tables, and integrity constraints. A logical schema defines the design of the information system at its logical level.

Software developers, as well as administrators, tend to work at this level. Although the data can be described as data records that are stored in the form of data structures, the data structure implementation and other internal details are hidden at this level.
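One way to picture the logical level is a small set of tables, a view, and integrity constraints. The sketch below uses Python's standard `sqlite3` module with an in-memory database. The table and column names (`customer`, `customer_order`, `customer_totals`) are hypothetical, and SQLite is only a convenient stand-in for whichever database an organization actually uses.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
# SQLite enforces foreign keys only when this pragma is switched on.
conn.execute("PRAGMA foreign_keys = ON")

conn.executescript("""
CREATE TABLE customer (
    customer_id INTEGER PRIMARY KEY,
    name        TEXT NOT NULL
);
CREATE TABLE customer_order (
    order_id    INTEGER PRIMARY KEY,
    customer_id INTEGER NOT NULL REFERENCES customer(customer_id),
    total       REAL NOT NULL CHECK (total >= 0)
);
CREATE VIEW customer_totals AS
    SELECT c.name AS name, SUM(o.total) AS spent
    FROM customer AS c
    JOIN customer_order AS o ON o.customer_id = c.customer_id
    GROUP BY c.customer_id;
""")

conn.execute("INSERT INTO customer VALUES (1, 'Ada')")
conn.execute("INSERT INTO customer_order VALUES (10, 1, 25.0)")
conn.execute("INSERT INTO customer_order VALUES (11, 1, 5.0)")


def violates_constraint(sql):
    """Return True if the statement is rejected by an integrity constraint."""
    try:
        conn.execute(sql)
    except sqlite3.IntegrityError:
        return True
    return False


# An order for a customer that does not exist breaks the foreign key.
orphan_rejected = violates_constraint(
    "INSERT INTO customer_order VALUES (12, 99, 1.0)")
# A negative total breaks the CHECK constraint.
negative_rejected = violates_constraint(
    "INSERT INTO customer_order VALUES (13, 1, -1.0)")

totals = conn.execute("SELECT name, spent FROM customer_totals").fetchall()
print(orphan_rejected, negative_rejected, totals)
```

The definitions say what must hold for the data (keys, references, allowed values) without saying how rows are laid out on disk.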

Physical Schema

A physical data model or physical schema is a representation of an implementation design; it describes how the data is actually stored within the physical parameters of a particular system.

A complete physical schema includes all the information system artifacts required to achieve performance goals or create relationships between data, such as indexes, linking tables, and constraint definitions. Analysts can use a physical schema to calculate storage estimates, and this may include specific storage allocation details for an information system.
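The artifacts named above can be sketched with Python's standard `sqlite3` module. The schema below is hypothetical: `order_item` is a linking table between two illustrative tables, and `idx_order_item_product` is an index added for lookups by product. The `estimate_bytes` helper is an assumed back-of-the-envelope formula, not a calculation taken from any database vendor.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE product (
    product_id INTEGER PRIMARY KEY,
    sku        TEXT NOT NULL
);
CREATE TABLE customer_order (
    order_id  INTEGER PRIMARY KEY,
    placed_on TEXT NOT NULL
);
-- Linking table: one row per (order, product) pair.
CREATE TABLE order_item (
    order_id   INTEGER NOT NULL REFERENCES customer_order(order_id),
    product_id INTEGER NOT NULL REFERENCES product(product_id),
    quantity   INTEGER NOT NULL CHECK (quantity > 0),
    PRIMARY KEY (order_id, product_id)
);
-- Index chosen to speed up "which orders contain this product?" lookups.
CREATE INDEX idx_order_item_product ON order_item(product_id);
""")

# Column 1 of each PRAGMA index_list row is the index name.
index_names = [row[1] for row in conn.execute("PRAGMA index_list('order_item')")]


def estimate_bytes(row_count, bytes_per_row, overhead_pct=20):
    """Rough table size: raw row bytes plus a percentage for page overhead.

    The 20 percent default is an assumption for illustration only.
    """
    return row_count * bytes_per_row * (100 + overhead_pct) // 100


print(index_names)
print(estimate_bytes(1_000_000, 24))
```

Real storage planning depends on the database engine, page size, and data types, so an estimate like this is only a starting point.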


There are various techniques for successful data modeling, though the basic concepts remain the same across them. Popular data modeling techniques include the hierarchical, relational, network, entity-relationship, and object-oriented techniques.

Hierarchical Technique

The hierarchical data modeling technique follows a tree-like structure in which nodes are sorted in a particular order. A hierarchy is an arrangement of items represented as “above,” “below,” or “at the same level as” each other. The hierarchical technique was implemented in the IBM Information Management System (IMS), which was introduced in 1966.

Hierarchy is a popular concept in a wide variety of fields, including computer science, mathematics, design, architecture, systematic biology, philosophy, and social sciences. As a data modeling technique, however, it is rarely used now because of the difficulties of retrieving and accessing data.
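A minimal sketch of the hierarchical idea, assuming a hypothetical organization chart: each node has exactly one parent, and finding a record means walking down from the root. The `Node` class and `find_path` function are illustrative names, not part of IMS or any other product.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Node:
    name: str
    children: List["Node"] = field(default_factory=list)

    def add(self, child: "Node") -> "Node":
        """Attach a child under this node and return the child."""
        self.children.append(child)
        return child


def find_path(root: Node, target: str) -> Optional[List[str]]:
    """Depth-first search from the root; returns the names on the way to target."""
    if root.name == target:
        return [root.name]
    for child in root.children:
        sub_path = find_path(child, target)
        if sub_path is not None:
            return [root.name] + sub_path
    return None


company = Node("Company")
sales = company.add(Node("Sales"))
engineering = company.add(Node("Engineering"))
sales.add(Node("EMEA Team"))
engineering.add(Node("Platform Team"))

path = find_path(company, "Platform Team")
print(path)  # ['Company', 'Engineering', 'Platform Team']
```

Because the search has to start at the top of the tree, access paths are fixed by the structure, which hints at why retrieval can be awkward in this model.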

Relational Technique

The relational data modeling technique describes the relationships between entities, which reduces complexity and provides a clear overview. The relational model was first proposed as an alternative to the hierarchical model by IBM researcher Edgar F. Codd in 1969. It supports four kinds of relationships between entities: one to one, one to many, many to one, and many to many.
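The four kinds of relationships can be made concrete with a small helper that inspects pairs of related values. This is only a sketch: the `cardinality` function and the sample data are hypothetical, and in practice the cardinality is declared in the schema rather than inferred from whatever rows happen to exist.

```python
from collections import defaultdict


def cardinality(pairs):
    """Classify (left, right) pairs by how many partners each side has."""
    rights_per_left = defaultdict(set)
    lefts_per_right = defaultdict(set)
    for left, right in pairs:
        rights_per_left[left].add(right)
        lefts_per_right[right].add(left)

    left_has_many = any(len(v) > 1 for v in rights_per_left.values())
    right_has_many = any(len(v) > 1 for v in lefts_per_right.values())

    if left_has_many and right_has_many:
        return "many to many"
    if left_has_many:
        return "one to many"
    if right_has_many:
        return "many to one"
    return "one to one"


# Illustrative sample data for each kind of relationship.
person_passport = [("ada", "P1"), ("bob", "P2")]
customer_order = [("ada", "o1"), ("ada", "o2"), ("bob", "o3")]
employee_department = [("ada", "eng"), ("bob", "eng")]
student_course = [("ada", "math"), ("ada", "art"), ("bob", "math")]

for name, pairs in [
    ("person/passport", person_passport),
    ("customer/order", customer_order),
    ("employee/department", employee_department),
    ("student/course", student_course),
]:
    print(name, "->", cardinality(pairs))
```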

Network Technique

The network data modeling technique is a flexible way to represent objects and the underlying relationships between entities: the objects are represented as nodes, and the relationships between the nodes are illustrated as edges. It was inspired by the hierarchical technique and was originally introduced by Charles Bachman in 1969.

The network data modeling technique makes it easier to convey complex relationships because records can be linked to multiple parent records.
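The difference from a strict hierarchy can be sketched as a graph in which a record may have more than one parent. The `Network` class and the supplier, warehouse, and part names below are hypothetical and serve only to illustrate the idea.

```python
from collections import defaultdict


class Network:
    """Records as nodes, parent/child links as edges."""

    def __init__(self):
        self.parents = defaultdict(set)   # child record -> its parent records
        self.children = defaultdict(set)  # parent record -> its child records

    def link(self, parent, child):
        """Add an edge; a child may be linked to any number of parents."""
        self.parents[child].add(parent)
        self.children[parent].add(child)


net = Network()
net.link("Supplier A", "Part 42")
net.link("Warehouse 7", "Part 42")  # second parent for the same record
net.link("Supplier A", "Part 43")

owners_of_part_42 = sorted(net.parents["Part 42"])
parts_from_supplier_a = sorted(net.children["Supplier A"])
print(owners_of_part_42)      # ['Supplier A', 'Warehouse 7']
print(parts_from_supplier_a)  # ['Part 42', 'Part 43']
```

In a tree, "Part 42" would have to be duplicated under each parent; here one record is shared by both.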

Entity-Relationship Technique

The entity-relationship (ER) data modeling technique represents entities and the relationships between them in a graphical format consisting of entities, attributes, and relationships. An entity can be anything, such as an object, a concept, or a piece of data. The entity-relationship technique was developed for databases and introduced by Peter Chen in 1976. It is a high-level conceptual model that is used to define data elements and relationships in a sophisticated information system.
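ER models are normally drawn as diagrams, but the same three ingredients can be sketched in code. The `Entity` and `Relationship` classes and the student and course example below are assumptions made for illustration; they are not a standard ER notation.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass(frozen=True)
class Entity:
    name: str
    attributes: Tuple[str, ...]
    key: str  # attribute that identifies one instance of the entity

    def __post_init__(self):
        # The identifying attribute must be one of the declared attributes.
        if self.key not in self.attributes:
            raise ValueError(f"{self.key!r} is not an attribute of {self.name}")


@dataclass(frozen=True)
class Relationship:
    name: str
    left: Entity
    right: Entity
    cardinality: str


student = Entity("Student", ("student_id", "name"), key="student_id")
course = Entity("Course", ("course_id", "title"), key="course_id")
enrolls = Relationship("enrolls_in", student, course, "many to many")


def describe(rel: Relationship) -> str:
    """One-line summary of a relationship, as it might be labeled on a diagram."""
    return f"{rel.left.name} {rel.name} {rel.right.name} ({rel.cardinality})"


print(describe(enrolls))  # Student enrolls_in Course (many to many)
```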

Object-Oriented Technique

The object-oriented data modeling technique constructs objects that represent real-life scenarios. Object-oriented methodologies were introduced in the early 1990s and were inspired by a large group of leading data scientists.

An object-oriented model is a collection of objects that contain stored values, and those values are themselves objects. The objects have similar functionality and are linked to other objects.
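A short sketch of these ideas, using hypothetical `Address`, `Person`, and `Employee` classes: a stored value (`address`) is itself an object, related objects share functionality through a common base class, and one object links to another through a reference.

```python
class Address:
    def __init__(self, city):
        self.city = city


class Person:
    def __init__(self, name, address):
        self.name = name
        self.address = address  # the stored value is itself an object

    def label(self):
        """Functionality shared by every Person, including subclasses."""
        return f"{self.name} ({self.address.city})"


class Employee(Person):
    def __init__(self, name, address, manager=None):
        super().__init__(name, address)
        self.manager = manager  # link to another object, or None


# Illustrative instances only.
lead = Employee("Ada", Address("London"))
developer = Employee("Bob", Address("Oslo"), manager=lead)

print(developer.label())          # Bob (Oslo)
print(developer.manager.label())  # Ada (London)
```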

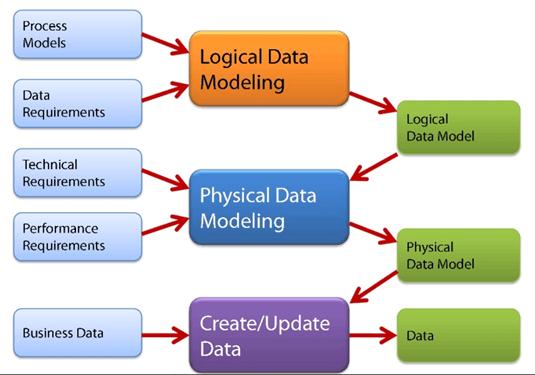

Data Modeling: An Integrated View

Data modeling is an essential technique for understanding relationships between data sets. The integrated view of conceptual, logical, and physical data models helps users understand the information and ensures that the right information is used across an entire enterprise.

Although data modeling can take time to perform effectively, it can save significant time and money by identifying errors before they occur. Sometimes a small change in structure may require modification of an entire application.

Some information systems, such as navigational systems, involve complex application development and management that require advanced data modeling skills. Many open source and commercial Computer-Aided Software Engineering (CASE) tools are widely used for data modeling.
