Getting the data right is the first step in any AI or machine learning project -- and it's often more time-consuming and complex than crafting the machine learning algorithms themselves. Advance planning to help streamline and improve data preparation in machine learning can save considerable work down the road. It can also lead to more accurate and adaptable algorithms.

"Data preparation is the action of gathering the data you need, massaging it into a format that's computer-readable and understandable, and asking hard questions of it to check it for completeness and bias," said Eli Finkelshteyn, founder and CEO of Constructor.io, which makes an AI-driven search engine for product websites.

It's tempting to focus only on the data itself, but it's a good idea to first consider the problem you're trying to solve. That can help simplify considerations about what kind of data to gather, how to ensure it fits the intended purpose and how to transform it into the appropriate format for a specific type of algorithm.

Good data preparation can lead to more accurate and efficient algorithms, while making it easier to pivot to new analytics problems, adapt when model accuracy drifts and save data scientists and business users considerable time and effort down the line.

The importance of data preparation in machine learning

"Being a great data scientist is like being a great chef," said Donncha Carroll, a partner at consultancy Axiom Consulting Partners. "To create an exceptional meal, you must build a detailed understanding of each ingredient and think through how they'll complement one another to produce a balanced and memorable dish. For a data scientist, this process of discovery creates the knowledge needed to understand more complex relationships, what matters and what doesn't, and how to tailor the data preparation approach necessary to lay the groundwork for a great ML model."

Managers need to appreciate the ways in which data shapes machine learning application development differently from traditional application development. "Unlike traditional rule-based programming, machine learning consists of two parts that make up the final executable algorithm -- the ML algorithm itself and the data to learn from," explained Felix Wick, corporate vice president of data science at supply chain management platform provider Blue Yonder. "But raw data are often not ready to be used in ML models. So, data preparation is at the heart of ML."

Data preparation consists of several steps, which consume more time than other aspects of machine learning application development. A 2021 study by data science platform vendor Anaconda found that data scientists spend an average of 22% of their time on data preparation, which is more than the average time spent on other tasks like deploying models, model training and creating data visualizations.

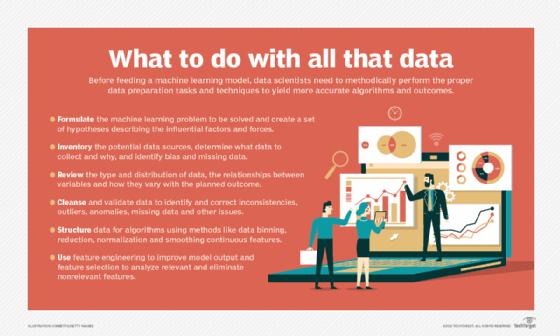

Beyond being time-intensive, data preparation requires data scientists to attend to a range of considerations. Following are six key steps in the process.

1. Problem formulation

Data preparation for building machine learning models is a lot more than just cleaning and structuring data. In many cases, it's helpful to begin by stepping back from the data to think about the underlying problem you're trying to solve. "To build a successful ML model," Carroll advised, "you must develop a detailed understanding of the problem to inform what you do and how you do it."

Start by spending time with the people who work in the domain and understand the problem space well. Synthesize what you learn from those conversations, and use your experience to create a set of hypotheses that describe the factors and forces involved. This simple step is often skipped or underinvested in, Carroll noted, even though it can make a significant difference in deciding what data to capture. It can also provide useful guidance on how the data should be transformed and prepared for the machine learning model.

An Axiom legal client, for example, wanted to know how different elements of service delivery impact account retention and growth. Carroll's team collaborated with the attorneys to develop a hypothesis that accounts served by legal professionals experienced in their industry tend to be happier and continue as clients longer. To provide that information as an input to a machine learning model, they looked back over the course of each professional's career and used billing data to determine how much time they spent serving clients in that industry.

"Ultimately," Carroll added, "it became one of the most important predictors of client retention and something we would never have calculated without spending the time upfront to understand what matters and how it matters."
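One plausible way to compute a feature like the one Carroll describes is to total each professional's billed hours per client industry, then look up the serving professional's hours in the account's own industry. The sketch below assumes a hypothetical list of billing records with professional, industry and hours fields; it is not Axiom's actual data model or method.

```python
from collections import defaultdict

# Hypothetical billing records: (professional, client_industry, hours_billed).
# Names and values are illustrative assumptions, not a real schema.
billing = [
    ("alice", "banking", 120.0),
    ("alice", "banking", 80.0),
    ("alice", "retail", 15.0),
    ("bob", "retail", 200.0),
]

def industry_experience(records):
    """Total hours each professional has billed to clients in each industry."""
    hours = defaultdict(float)
    for professional, industry, billed in records:
        hours[(professional, industry)] += billed
    return dict(hours)

def account_feature(experience, professional, account_industry):
    """Hours the serving professional has spent in the account's own industry."""
    return experience.get((professional, account_industry), 0.0)

experience = industry_experience(billing)
print(account_feature(experience, "alice", "banking"))  # 200.0
print(account_feature(experience, "bob", "banking"))    # 0.0
```

A production version would also restrict the history to billing that predates the period being predicted, so the model doesn't learn from information it wouldn't have had at the time.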

2. Data collection and discovery

Once a data science team has formulated the machine learning problem to be solved, it needs to inventory potential data sources within the enterprise and from external third parties. The data collection process must consider not only what the data is purported to represent, but also why it was collected and what it might mean, particularly when used in a different context. It's also essential to consider factors that may have biased the data.

"To reduce and mitigate bias in machine learning models," said Sophia Yang, a senior data scientist at Anaconda, "data scientists need to ask themselves where and how the data was collected to determine if there were significant biases that might have been captured." To train a machine learning model that predicts customer behavior, for example, look at the data and ensure the data set was collected from diverse people, geographical areas and perspectives.
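A simple first check on how data was collected is to measure how each group is represented. This sketch counts the share of records per value of a grouping field and flags groups below a minimum share. The `region` field, the sample records and the 15% threshold are illustrative assumptions; the right grouping and threshold depend on the population the model is meant to serve.

```python
from collections import Counter

def group_shares(records, key):
    """Fraction of records belonging to each value of `key`."""
    counts = Counter(record[key] for record in records)
    total = sum(counts.values())
    return {group: n / total for group, n in counts.items()}

def underrepresented(shares, min_share):
    """Groups whose share of the data falls below `min_share`."""
    return sorted(group for group, share in shares.items() if share < min_share)

# Hypothetical customer records: 6 north, 3 south, 1 west.
customers = [{"region": r} for r in ["north"] * 6 + ["south"] * 3 + ["west"]]
shares = group_shares(customers, "region")
print(shares)                                    # {'north': 0.6, 'south': 0.3, 'west': 0.1}
print(underrepresented(shares, min_share=0.15))  # ['west']
```

Balanced counts are only a starting point: a group can be well represented and still be recorded in a biased way, so the questions about where and how the data was collected still apply.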

"The most important step often missed in data preparation for machine learning is asking critical questions of data that otherwise looks technically correct," Finkelshteyn said. In addition to investigating bias, he recommended determining if there's reason to believe that important missing data may lead to a partial picture of the analysis being done. In some cases, analytics teams use data that works technically but produces inaccurate or incomplete results, and people who use the resulting models build on these faulty learnings without knowing something is wrong.
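To check whether missing data might leave a partial picture, it helps to measure not only how often each field is missing but also whether the gaps cluster in particular groups. This is a minimal sketch over hypothetical customer records (the field names are assumptions) that treats an absent key and `None` alike as missing.

```python
from collections import defaultdict

def missing_rates(rows, columns):
    """Fraction of rows in which each column is absent or None."""
    n = len(rows)
    return {c: sum(1 for r in rows if r.get(c) is None) / n for c in columns}

def missing_rate_by_group(rows, column, group_key):
    """Missing rate of `column` within each value of `group_key`."""
    totals, missing = defaultdict(int), defaultdict(int)
    for r in rows:
        g = r.get(group_key)
        totals[g] += 1
        if r.get(column) is None:
            missing[g] += 1
    return {g: missing[g] / totals[g] for g in totals}

customers = [
    {"customer_id": 1, "income": 52000, "region": "north"},
    {"customer_id": 2, "income": 47000, "region": "north"},
    {"customer_id": 3, "income": None, "region": "south"},
    {"customer_id": 4, "region": "south"},  # income key absent entirely
]
print(missing_rates(customers, ["customer_id", "income", "region"]))
# {'customer_id': 0.0, 'income': 0.5, 'region': 0.0}
print(missing_rate_by_group(customers, "income", "region"))
# {'north': 0.0, 'south': 1.0}
```

Here the overall income missing rate of 50% hides the fact that every southern customer lacks income, so a model trained on this data would learn about income almost entirely from one region.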

3. Data exploration

Data scientists need to fully understand the data they're working with early in the process to cultivate insights into its meaning and applicability. "A common mistake is to launch into model building without taking the time to really understand the data you've wrangled," Carroll said.

Data exploration means reviewing such things as the type and distribution of data contained within each variable, the relationships between variables and how they vary relative to the outcome you're predicting or interested in achieving.

This step can highlight problems like collinearity -- variables that move together -- or situations where standardization of data sets and other data transformations are necessary. It can also surface opportunities to improve model performance, like reducing the dimensionality of a data set.
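These exploration checks can be sketched with Python's standard library: a per-variable profile (type, range, median and correlation with the outcome), a scan for highly correlated pairs of variables and a z-score standardization. The housing columns, the `price_k` outcome and the 0.9 collinearity threshold are hypothetical choices for illustration, and Pearson correlation only captures linear relationships.

```python
import statistics
from itertools import combinations

def pearson(xs, ys):
    """Pearson correlation of two equal-length numeric lists (neither constant)."""
    mx, my = statistics.fmean(xs), statistics.fmean(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = sum((x - mx) ** 2 for x in xs) ** 0.5
    sy = sum((y - my) ** 2 for y in ys) ** 0.5
    return cov / (sx * sy)

def profile(columns, target):
    """Type, spread and linear correlation with the outcome for each variable."""
    return {
        name: {
            "type": type(values[0]).__name__,
            "min": min(values),
            "median": statistics.median(values),
            "max": max(values),
            "corr_with_target": round(pearson(values, target), 3),
        }
        for name, values in columns.items()
    }

def collinear_pairs(columns, threshold=0.9):
    """Pairs of variables whose absolute correlation meets the threshold."""
    pairs = []
    for a, b in combinations(sorted(columns), 2):
        r = pearson(columns[a], columns[b])
        if abs(r) >= threshold:
            pairs.append((a, b, round(r, 3)))
    return pairs

def standardize(values):
    """Z-scores: subtract the mean, divide by the population standard deviation."""
    mean, sd = statistics.fmean(values), statistics.pstdev(values)
    if sd == 0:
        return [0.0] * len(values)  # a constant column carries no information
    return [(v - mean) / sd for v in values]

# Hypothetical housing data.
columns = {
    "floor_area_m2": [50, 70, 90, 120, 150],
    "rooms": [2, 3, 4, 5, 6],
    "age_years": [30, 5, 18, 40, 12],
}
price_k = [150, 210, 260, 330, 420]

report = profile(columns, price_k)
print(report["floor_area_m2"])
print(collinear_pairs(columns))  # flags floor_area_m2 and rooms as moving together
print(standardize(columns["floor_area_m2"]))
```

Flagged pairs are candidates for dropping or combining a variable, or for dimensionality reduction, before modeling.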

Data visualizations can also help improve this process. "This might seem like an added step that isn't needed," Yang said, "but our brains are great at spotting patterns along with data that doesn't match the pattern." By creating suitable visualizations before drawing conclusions, data scientists can more easily see trends and explore the data correctly. Popular data visualization tools include Tableau, Microsoft Power BI, D3.js and Python libraries such as Matplotlib, Bokeh and the HoloViz stack.

4. Data cleansing and validation

Various data cleansing and validation techniques can help analytics teams identify and rectify inconsistencies, outliers, anomalies, missing data and other issues. Missing data values, for example, can often be addressed with imputation tools that fill empty fields with statistically relevant substitutes.

But Blue Yonder's Wick cautioned that semantic meaning is an often overlooked aspect of missing data. In many cases, creating a dedicated category that captures the significance of missing values can help. In others, teams may consider explicitly setting missing values to a neutral value to minimize their impact on machine learning models.
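The sketch below contrasts these options for a few hypothetical columns: statistical imputation (the median for numbers, the most frequent value for categories), a dedicated category that preserves the meaning of a missing value, and a neutral fill paired with a 0/1 flag recording that the value was missing. The helper names and sample values are assumptions, and 0.0 is only neutral here because the example rating column is assumed to be standardized already.

```python
import statistics

def impute_median(values):
    """Fill None with the median of the observed numeric values."""
    fill = statistics.median([v for v in values if v is not None])
    return [fill if v is None else v for v in values]

def impute_mode(values):
    """Fill None with the most frequent observed category."""
    fill = statistics.mode([v for v in values if v is not None])
    return [fill if v is None else v for v in values]

def missing_as_category(values, label="MISSING"):
    """Keep 'missing' as its own category, e.g. 'no promo code was offered'."""
    return [label if v is None else v for v in values]

def missing_as_neutral(values, neutral=0.0):
    """Fill None with a neutral value and return a parallel was-missing flag."""
    filled = [neutral if v is None else v for v in values]
    was_missing = [int(v is None) for v in values]
    return filled, was_missing

income = [52000, None, 61000, 48000, None]
region = ["north", None, "south", "north"]
promo_code = ["SPRING10", None, "VIP", None]
rating_z = [0.8, None, -1.2]  # assumed already standardized, so 0.0 is the mean

print(impute_median(income))            # [52000, 52000, 61000, 48000, 52000]
print(impute_mode(region))              # ['north', 'north', 'south', 'north']
print(missing_as_category(promo_code))  # ['SPRING10', 'MISSING', 'VIP', 'MISSING']
print(missing_as_neutral(rating_z))     # ([0.8, 0.0, -1.2], [0, 1, 0])
```

In practice, fill values such as the median should be computed on the training data only and then reused on validation and test data, so that information from held-out rows doesn't leak into training.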

A wide range of commercial and open source tools can be used to cleanse and validate data for machine learning and ensure good quality data. Open source technologies such as Great Expectations and Pandera, for example, are designed to validate the data frames commonly used to organize analytics data into two-dimensional tables. Tools for testing code and data processing workflows are also available. One of them is pytest, which, Yang said, data scientists can use to bring a software developer's unit-testing mindset to their work by manually writing tests for their workflows.
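As an illustration of that unit-testing mindset, the file below defines a hypothetical cleaning step, `clean_orders`, and two pytest-style tests for it. It uses only plain `assert` statements, which pytest collects from functions whose names start with `test_` in files named like `test_*.py`; the function and field names are assumptions, not part of any tool's API.

```python
def clean_orders(rows):
    """Hypothetical pipeline step: drop rows lacking an order_id, parse quantity as int."""
    cleaned = []
    for row in rows:
        if row.get("order_id") is None:
            continue  # an order without an ID can't be joined or deduplicated
        cleaned.append({**row, "quantity": int(row["quantity"])})
    return cleaned

def test_rows_without_order_id_are_dropped():
    rows = [{"order_id": 1, "quantity": "2"}, {"order_id": None, "quantity": "5"}]
    assert [r["order_id"] for r in clean_orders(rows)] == [1]

def test_quantity_is_parsed_as_non_negative_int():
    rows = [{"order_id": 7, "quantity": "3"}, {"order_id": 8, "quantity": "0"}]
    for r in clean_orders(rows):
        assert isinstance(r["quantity"], int) and r["quantity"] >= 0
```

Running `pytest` in the directory containing the file executes both tests and reports any assertion that fails, so a change that breaks the cleaning logic is caught before it reaches a model.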

5. Data structuring

Once data science teams are satisfied with their data, they need to consider the machine learning algorithms being used. Some algorithms, for example, work better when data is broken into categories, such as age ranges, rather than left as raw numbers.
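Turning a raw number into categories such as age ranges can be as simple as looking up which interval it falls into. This sketch uses Python's `bisect` module with hypothetical, hand-chosen edges; where to draw the edges is a modeling decision, not something the code decides.

```python
from bisect import bisect_right

# Hypothetical bin edges; each edge is the inclusive lower bound of a new range.
AGE_EDGES = [18, 25, 35, 50, 65]
AGE_LABELS = ["under 18", "18-24", "25-34", "35-49", "50-64", "65+"]

def age_range(age):
    """Map a raw age to its labeled range."""
    return AGE_LABELS[bisect_right(AGE_EDGES, age)]

print([age_range(a) for a in [17, 18, 24, 25, 49, 64, 65, 90]])
# ['under 18', '18-24', '18-24', '25-34', '35-49', '50-64', '65+', '65+']
```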

Two often-missed data preprocessing tricks, Wick said, are data binning and smoothing continuous features. These data regularization methods can reduce a machine learning model's variance by preventing it from being misled by minor statistical fluctuations in a data set.

Binning data into groups can be done either equidistantly, with the same "width" for each bin, or equi-statistically, with approximately the same number of samples in each bin. Binning can also serve as a prerequisite for local optimization of the data in each bin to help produce low-bias machine learning models.
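The two binning schemes can be sketched side by side: equidistant (equal-width) bins that each span the same range of values, and equi-statistical (equal-frequency, or quantile) bins that each receive about the same number of samples. The sample values are hypothetical and include one outlier to show how the schemes differ.

```python
def equal_width_bins(values, n_bins):
    """Equidistant binning: every bin spans the same range of values."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0] * len(values)  # all values identical: a single bin
    width = (hi - lo) / n_bins
    # The maximum would land in bin n_bins, so clamp it into the last bin.
    return [min(int((v - lo) / width), n_bins - 1) for v in values]

def equal_frequency_bins(values, n_bins):
    """Equi-statistical binning: each bin gets roughly the same number of samples."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    bins = [0] * len(values)
    for rank, i in enumerate(order):
        bins[i] = rank * n_bins // len(values)
    return bins  # note: tied values can be split across neighboring bins

order_sizes = [1, 2, 3, 4, 5, 6, 7, 8, 100]  # hypothetical, with one outlier
print(equal_width_bins(order_sizes, 3))      # [0, 0, 0, 0, 0, 0, 0, 0, 2]
print(equal_frequency_bins(order_sizes, 3))  # [0, 0, 0, 1, 1, 1, 2, 2, 2]
```

The single outlier stretches the equal-width bins so that eight of the nine samples share bin 0, whereas equal-frequency binning keeps three samples in each bin.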

Smoothing continuous features can help in "denoising" raw data. It can also be used to impose causal assumptions about the data-generating process by representing relationships in ordered data sets as monotonic functions that preserve the order among data elements.
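Two simple smoothers illustrate these ideas. A centered moving average is one common way to denoise a series, and isotonic regression, fitted here with the pool-adjacent-violators algorithm, returns the closest (least-squares) non-decreasing sequence, which is one way to impose a monotonic relationship. Both are swapped-in examples rather than the specific methods Wick uses, and the sample series are hypothetical and assumed to be already sorted by the ordering variable (for example, discount level).

```python
def moving_average(values, window=3):
    """Centered moving average; the window shrinks at the edges of the series."""
    half = window // 2
    out = []
    for i in range(len(values)):
        chunk = values[max(0, i - half): i + half + 1]
        out.append(sum(chunk) / len(chunk))
    return out

def isotonic_increasing(values):
    """Pool adjacent violators: least-squares fit constrained to be non-decreasing."""
    blocks = []  # each block is [mean, count]
    for v in values:
        blocks.append([float(v), 1])
        # Merge backward while the previous block's mean exceeds the current one.
        while len(blocks) > 1 and blocks[-2][0] > blocks[-1][0]:
            m2, c2 = blocks.pop()
            m1, c1 = blocks.pop()
            blocks.append([(m1 * c1 + m2 * c2) / (c1 + c2), c1 + c2])
    fitted = []
    for mean, count in blocks:
        fitted.extend([mean] * count)
    return fitted

print(moving_average([4, 8, 6, 10, 8]))         # [6.0, 6.0, 8.0, 8.0, 9.0]
print(isotonic_increasing([2, 5, 4, 7, 6, 9]))  # [2.0, 4.5, 4.5, 6.5, 6.5, 9.0]
```

The isotonic fit keeps the overall upward trend but removes the small dips that a model might otherwise treat as meaningful.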

Other actions that data scientists often take in structuring data for machine learning include the following:

6. Feature engineering and selection

The last stage in data preparation before developing a machine learning model is feature engineering and feature selection.

Wick said feature engineering, which involves adding or creating new variables to improve a model's output, is the main craft of data scientists and comes in various forms. Examples include extracting the days of the week or other variables from a data set, decomposing variables into separate features, aggregating variables and transforming features based on probability distributions.
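Each kind of feature engineering listed above can be sketched in a few lines: extracting the day of the week, decomposing a date into year and month, aggregating per customer and applying a log transform, a common choice for right-skewed values such as order amounts. The order records and feature names are hypothetical.

```python
import math
from collections import defaultdict
from datetime import date

# Hypothetical order records.
orders = [
    {"customer": "c1", "order_date": date(2024, 3, 4), "amount": 120.0},
    {"customer": "c1", "order_date": date(2024, 3, 9), "amount": 30.0},
    {"customer": "c2", "order_date": date(2024, 3, 10), "amount": 950.0},
]

def engineer(orders):
    # Aggregate: total spend and order count per customer.
    totals, counts = defaultdict(float), defaultdict(int)
    for o in orders:
        totals[o["customer"]] += o["amount"]
        counts[o["customer"]] += 1
    features = []
    for o in orders:
        d = o["order_date"]
        features.append({
            "day_of_week": d.weekday(),          # extracted: Monday=0 ... Sunday=6
            "is_weekend": d.weekday() >= 5,
            "year": d.year,                      # decomposed date parts
            "month": d.month,
            "customer_total": totals[o["customer"]],
            "customer_orders": counts[o["customer"]],
            "log_amount": math.log1p(o["amount"]),  # compresses a right-skewed scale
        })
    return features

features = engineer(orders)
print(features[0]["day_of_week"])     # 0 (2024-03-04 was a Monday)
print(features[0]["customer_total"])  # 150.0
```

The per-customer totals here are computed over all orders, including later ones; when predicting at order time, aggregates should be restricted to earlier orders to avoid leaking future information.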

Data scientists also must address feature selection -- choosing relevant features to analyze and eliminating nonrelevant ones. Many features may look promising but lead to problems like extended model training and overfitting, which limits a model's ability to accurately analyze new data. Methods such as lasso regression and automatic relevance determination can help with feature selection.
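Lasso regression selects features by adding an L1 penalty that drives the coefficients of weakly informative features to exactly zero. This minimal sketch fits it by coordinate descent, minimizing (1/(2n)) * ||y - Xw||^2 + alpha * ||w||_1, the objective scikit-learn documents for its `Lasso` estimator. It assumes centered features and a centered target so no intercept is needed, and the two-feature data set is hypothetical; in practice a library implementation such as scikit-learn's `Lasso` would be the usual choice.

```python
def soft_threshold(rho, alpha):
    """Shrink rho toward zero by alpha, returning exactly 0.0 inside [-alpha, alpha]."""
    if rho > alpha:
        return rho - alpha
    if rho < -alpha:
        return rho + alpha
    return 0.0

def lasso(X, y, alpha, n_iter=200):
    """Coordinate descent for (1/(2n))*||y - Xw||^2 + alpha*||w||_1.
    Assumes the columns of X and the target y are already centered."""
    n, p = len(X), len(X[0])
    w = [0.0] * p
    residual = list(y)  # y - Xw, with w starting at zero
    col_sq = [sum(X[i][j] ** 2 for i in range(n)) / n for j in range(p)]
    for _ in range(n_iter):
        for j in range(p):
            if col_sq[j] == 0:
                continue  # a constant (all-zero after centering) column stays at 0
            # Correlation of feature j with the residual, adding back its own contribution.
            rho = sum(X[i][j] * (residual[i] + X[i][j] * w[j]) for i in range(n)) / n
            new_wj = soft_threshold(rho, alpha) / col_sq[j]
            delta = new_wj - w[j]
            if delta:
                for i in range(n):
                    residual[i] -= X[i][j] * delta
                w[j] = new_wj
    return w

X = [[-2, 1], [-1, -1], [0, 0], [1, -1], [2, 1]]  # columns: signal, noise
y = [-5.9, -3.1, 0.0, 2.9, 6.1]                   # roughly 3*signal + 0.1*noise
print(lasso(X, y, alpha=0.0))  # approximately [3.0, 0.1]
print(lasso(X, y, alpha=0.5))  # approximately [2.75, 0.0]
```

With `alpha=0.0` the same code reduces to ordinary least squares and keeps a small nonzero weight on the noise feature; at `alpha=0.5` the informative coefficient shrinks and the noise coefficient becomes exactly zero, which is what makes lasso useful for feature selection.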