Apple announced new features Thursday that will scan iPhone and iPad users' photos to detect and report large collections of child sexual abuse images stored on its cloud servers.

"We want to help protect children from predators who use communication tools to recruit and exploit them, and limit the spread of Child Sexual Abuse Material (CSAM)," Apple said in a statement.

"This program is ambitious, and protecting children is an important responsibility," it said. "Our efforts will evolve and expand over time."

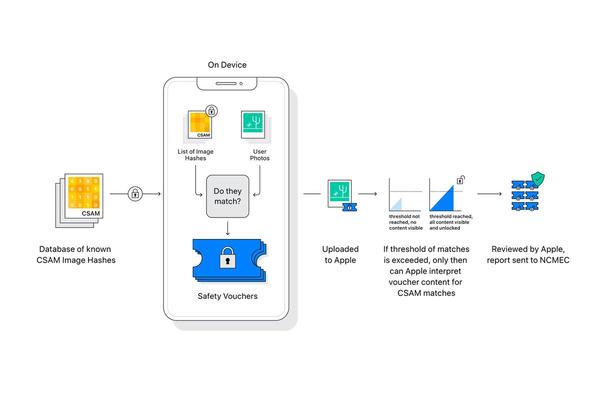

Apple said the detection work happens on the device. It insisted that only users who upload large collections of images to iCloud that match a database of known child sexual abuse material would cross a threshold that allows some of their photos to be decrypted and analyzed by Apple.

Noting that possessing CSAM "is an illegal act," Apple's chief privacy officer, Erik Neuenschwander, said on a media call Thursday that "the system was designed and trained" to detect images from the National Center for Missing & Exploited Children's CSAM database.

Neuenschwander said that people whose images are flagged to law enforcement would not be notified, but that their accounts would be disabled.

"The fact that your account is disabled is a noticeable act to you," Neuenschwander said.

Apple said its suite of mobile device operating systems will be updated with the new child safety feature "later this year."

John Clark, president and CEO of the National Center for Missing & Exploited Children, said: "Apple's expanded protection for children is a game-changer. With so many people using Apple products, these new safety measures have lifesaving potential for children who are being enticed online and whose horrific images are being circulated in child sexual abuse material."

Some privacy experts expressed concern.

"There are some problems with these systems," said Matthew D. Green, an associate professor of cryptography at Johns Hopkins University. "The first is that you have to trust Apple that they're only scanning for legitimately bad content. If the FBI or Chinese government lean on Apple and ask them to add specific images to their database, there will be no way for you (the user) to detect this. You'll just get reported for having those photos. In fact, the system design makes it almost impossible to know what's in that database if you don't trust Apple."

As part of the update, Apple's Messages app will also use "on-device machine learning to analyze image attachments and determine if a photo is sexually explicit" for minors whose devices use parental control features.

The Messages app will intervene if its on-device analysis determines that the minor may be sending or receiving an image or a video showing "the private body parts that you cover with bathing suits," according to slides provided by Apple.

The Messages app will add tools to warn children and their parents when sexually explicit photos are received or sent.


"When a child receives this type of content the photo will be blurred and the child will be warned, presented with helpful resources, and reassured it is okay if they do not want to view this photo," Apple said in a news release.

Informational screenshots show an iPhone warning children who try to send sensitive images that "sensitive photos and videos can be used to hurt you" and that the "person in this might not want it seen."

The release added: "As an additional precaution the child can also be told that, to make sure they are safe, their parents will get a message if they do view it. Similar protections are available if a child attempts to send sexually explicit photos. The child will be warned before the photo is sent and the parents can receive a message if the child chooses to send it."