In the past few years, facial recognition technology has become a critical tool for law enforcement: the FBI has used it to help identify suspects who stormed the Capitol on January 6 and state and local police say it's been instrumental in cases of robberies, assaults, and murders. Facial recognition software uses complex mathematical algorithms or instructions to compare a picture of a suspect's face to potentially millions of others in a database. But it's not just mugshots the software may be searching through. If you have a driver's license, there's a good chance your picture might have been searched, even if you've never committed a crime in your life.

In January 2020, Robert Williams arrived home from work to find two Detroit police officers waiting for him outside his house in the quiet suburb of Farmington Hills, Michigan.

They had a warrant for his arrest.

He'd never been in trouble with the law before and had no idea what he was being accused of.

Robert Williams: I'm like, "You can't just show up to my house and tell me I'm under arrest."

Robert Williams: The cop gets a piece of paper and it says, "Felony larceny," on it. I'm like, "Bro, I didn't steal nothin'." I'm like, "Y'all got the wrong person."

Williams, who is 43 and now recovering from a stroke, was handcuffed in front of his wife, Melissa, and their two daughters.

Melissa Williams: We thought it was maybe a mistaken identity. Like, did someone use his name?

He was brought to this detention center and locked in a cell overnight. Police believed Williams was this man in the red hat, recorded in a Detroit store in 2018 stealing $3,800 worth of watches.

When Detroit detectives finally questioned Williams more than 12 hours after his arrest, they showed him some photos, including one from the store security camera.

Robert Williams: So, he turns over the paper and he's like, "So, that's not you?" And I looked at it, picked it up and held it up to my face and I said, "I hope y'all don't think all Black people look alike." At this point, I'm upset. Like, bro, why am I even here? He's like, "So, I guess the computer got it wrong."

Anderson Cooper: The-- police officer said, "The computer got it wrong"?

Robert Williams: Yeah, the computer got it wrong.

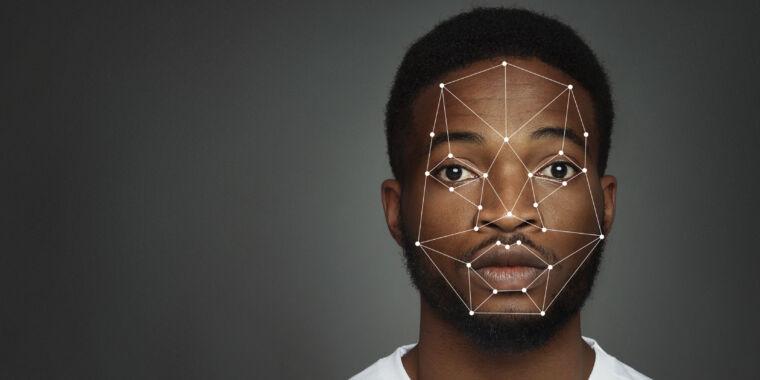

Williams didn't know the computer they were referring to was a facial recognition program. Detroit police showed us how their system works. First, a photo of an unknown suspect is run against a database of hundreds of thousands of mugshots, which we've blurred for this demonstration.

James Craig: It's an electronic mug book, if you will.

James Craig has been Detroit's chief of police since 2013.

James Craig: Once we insert a photograph, a probe photo-- into the software-- the computer may generate 100 probables. And then they rank these photographs in order of what the computer suggests or the software suggests is the most likely suspect.

Anderson Cooper: The software ranks it in order of-- of most likely suspect to least likely?

James Craig: Absolutely.

It's then up to an analyst to compare each of those possible matches to the suspect and decide whether any of them should be investigated further.
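The search-and-rank step described above can be sketched in a few lines. This is only an illustration, not how the Detroit system or any vendor's software actually works: it assumes each face has already been reduced to a short list of numbers, and it assumes cosine similarity as the comparison score. The names `rank_candidates` and `cosine_similarity`, and the toy vectors, are all made up for this example.

```python
import math

def cosine_similarity(a, b):
    """Similarity between two equal-length vectors (1.0 means same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def rank_candidates(probe, gallery, top_k=100):
    """Score every gallery photo against the probe and return the top_k,
    ordered from most similar to least similar."""
    scored = [(label, cosine_similarity(probe, vec)) for label, vec in gallery.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:top_k]

# Toy "face vectors" invented for illustration; real systems use far longer ones.
gallery = {
    "photo_A": [0.9, 0.1, 0.3],
    "photo_B": [0.2, 0.8, 0.5],
    "photo_C": [0.6, 0.5, 0.3],
}
probe = [0.88, 0.15, 0.3]

ranked = rank_candidates(probe, gallery, top_k=2)
for label, score in ranked:
    print(label, round(score, 3))
```

A ranked list like this always has a top entry, even when the person in the probe photo is not in the database at all, which is why the output is only a starting point for an analyst.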

In the Robert Williams case, Detroit police say they didn't have an analyst on duty that day, so they asked Michigan state police to run this photo through their system, which had a database of nearly 50 million faces taken from mugshots but also state IDs and driver's licenses. This old driver's license photo of Robert Williams popped up. We learned it was ranked 9th among 243 possible matches. An analyst then sent it to Detroit police as an investigative lead only, not probable cause to arrest.

Anderson Cooper: What happened in the case of Robert Williams? What went wrong?

James Craig: Sloppy, sloppy investigative work.

Sloppy, Chief Craig says, because the detective in Detroit did little other investigative work before getting a warrant to arrest Williams.

James Craig: The response by this administration-- that detective was disciplined. And, subsequently, a commanding officer of that command has been de-appointed. But it wasn't facial recognition that failed. What failed was a horrible investigation.

Two weeks after he was arrested, the charges against Robert Williams were dismissed.

Anderson Cooper: The police in Detroit say that the Williams case was in large part a result of sloppy detective work. Do you think that's true?

Clare Garvie: That's part of the story, for sure. But face recognition's also part of the story.

Clare Garvie, a lawyer at Georgetown's Center on Privacy and Technology, estimates facial recognition has been involved in hundreds of thousands of cases.

She's been tracking its use by police for years. Legislators, law enforcement and software developers have all sought out Garvie's input on the topic.

Clare Garvie: Because it's a machine, because it's math that does the face recognition match in these systems, we're giving it too much credence. We're saying, "It's math, therefore, it must be right."

Anderson Cooper: "The computer must be right"?

Clare Garvie: Exactly. When we want to agree with the computer, we are gonna go to find evidence that agrees with it.

But Garvie says the computer is only as good as the software it runs on.

Clare Garvie: There are some good algorithms; there are some terrible algorithms, and everything in between.

Patrick Grother has examined most of them. He is a computer scientist at a little-known government agency called the National Institute of Standards and Technology.

Every year, more than a hundred facial recognition developers around the world send his lab prototypes to test for accuracy.

Anderson Cooper: Are computers good at recognizing faces?

Patrick Grother: They're very good. I-- they're-- they're better than humans today.

Anderson Cooper: Are these algorithms flawless?

Patrick Grother: By no means.

Software developers train facial recognition algorithms by showing them huge numbers of human faces so they can learn how to spot similarities and differences, but they don't work the way you might expect.

Anderson Cooper: We've all seen in movies computers that-- form a map of the face. Is that what's happening with facial recognition?

Patrick Grother: I mean, historically, yes. But nowadays that is not the-- the approach.

Anderson Cooper: So it's not taking your nose and comparing it to noses in its database, or and then taking eyes and comparing it to eyes.

Patrick Grother: Nothing so explicit. It could be looking at eyebrows or eyes or nose or lips. It could be looking at-- skin texture. So if we have 20 photos of Anderson Cooper what is consistent? It's trying to uncover what makes Anderson Cooper, Anderson Cooper.

A year and a half ago, Grother and his team published a landmark study which found that many facial recognition algorithms had a harder time making out differences in Black, Asian, and female faces.

Patrick Grother: They make mistakes-- false negative errors, where they don't find the correct face, and false positive errors, where they find somebody else. In a criminal justice context, it could lead to a-- you know, an incorrect arrest.

Anderson Cooper: Why would race or gender of a person lead to misidentification?

Patrick Grother: The algorithm has been built on a finite number of photos that may or may not be demographically balanced.

Anderson Cooper: Might not have enough females, enough Asian people, enough Black people among the photos that it's using to teach the algorithm.

Patrick Grother: Yeah, to teach the algorithm how to-- do identity.
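The two kinds of error Grother describes can be counted separately for each demographic group, which is roughly how an imbalance would show up in testing. The sketch below is an assumption-laden illustration, not the lab's actual methodology: the function name `error_rates`, the group labels, and every trial in the list are invented, and the numbers say nothing about any real algorithm.

```python
from collections import defaultdict

def error_rates(trials):
    """trials: iterable of (group, same_person, declared_match) tuples.
    Returns false positive and false negative rates per group."""
    counts = defaultdict(lambda: {"fp": 0, "impostor": 0, "fn": 0, "genuine": 0})
    for group, same_person, declared_match in trials:
        c = counts[group]
        if same_person:
            c["genuine"] += 1
            if not declared_match:
                c["fn"] += 1  # missed the correct face
        else:
            c["impostor"] += 1
            if declared_match:
                c["fp"] += 1  # matched somebody else
    return {
        group: {
            "false_positive_rate": c["fp"] / c["impostor"] if c["impostor"] else None,
            "false_negative_rate": c["fn"] / c["genuine"] if c["genuine"] else None,
        }
        for group, c in counts.items()
    }

# Synthetic comparison outcomes, invented purely for illustration.
trials = [
    ("group_1", True, True),
    ("group_1", True, False),
    ("group_1", False, False),
    ("group_1", False, False),
    ("group_2", True, True),
    ("group_2", True, True),
    ("group_2", False, True),
    ("group_2", False, False),
]

rates = error_rates(trials)
print(rates)
```

Reporting the rates per group, rather than one overall figure, is what makes a gap between groups visible in the first place.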

Clare Garvie points out the potential for mistakes makes it all the more important how police use the information generated by the software.

Anderson Cooper: Is facial recognition being misused by police?

Clare Garvie: In the absence of rules, what-- I mean, what's misuse? There are very few rules in most places around how the technology's used.

It turns out there aren't well-established national guidelines and it's up to states, cities and local law enforcement agencies to decide how they use the technology: who can run facial recognition searches, what kind of formal training, if any, is needed, and what kind of images can be used in a search.

In a 2019 report, Garvie found surprising practices at some police departments, including the editing of suspects' facial features before running the photos in a search.

Clare Garvie: Most photos that police are-- are dealing with understandably are partially obscured. Police departments say, "No worries. Just cut and paste someone else's features, someone else's chin and mouth into that photo before submitting it."

Anderson Cooper: But, that's, like, half of somebody's face.

Clare Garvie: I agree. If we think about this, face recognition is considered a biometric, like fingerprinting. It's unique to the individual. So how can you swap out people's eyes and expect to still get a good result?

Detroit's police chief, James Craig, says they don't allow that kind of editing with their facial recognition system, which cost an estimated $1.8 million and has been in use since 2017. He says it's become a crucial tool in combating one of the highest violent crime rates in the country and was used in 117 investigations last year alone.

James Craig: We understand that the-- the software is not perfect. We know that.

Anderson Cooper: It's gotta be tempting for some officers to use it as more than just a lead.

James Craig: Well, as I like to always say to my staff, the end never justifies the means.

After Robert Williams, another alleged wrongful arrest by Detroit police came to light. Chief Craig says they've put in place stricter policies: limiting the use of facial recognition to violent crime, requiring police to disclose its use to prosecutors when seeking a warrant, and adding new layers of oversight.

James Craig: So analyst number one will go through the m-- methodical work of trying to identify the suspect. A second analyst has to go through the same level of rigor. The last step is if the supervisor concurs, now that person can be used as a lead. A lead only. A suspect cannot be arrested alone and charged alone based on the recognition.
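The review steps Chief Craig lists amount to a simple gate: a candidate becomes a lead only if two analysts and a supervisor all concur, and even then it is never grounds for arrest by itself. The sketch below restates that rule in code. The `Review` class, its field names, and the status strings are hypothetical and are not taken from any Detroit police system.

```python
from dataclasses import dataclass

@dataclass
class Review:
    analyst_one_concurs: bool
    analyst_two_concurs: bool
    supervisor_concurs: bool

def candidate_status(review: Review) -> str:
    """Apply the three-step sign-off described in the interview."""
    if (review.analyst_one_concurs
            and review.analyst_two_concurs
            and review.supervisor_concurs):
        # Even full agreement yields a lead, never probable cause.
        return "investigative lead only"
    return "not a lead"

print(candidate_status(Review(True, True, True)))
print(candidate_status(Review(True, False, True)))
```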

But there are police departments like this one in Woodbridge, New Jersey, where it's unclear what rules are in place. In February 2019, police in Woodbridge arrested 33-year-old Nijeer Parks.

Anderson Cooper: Did they tell you why they thought it was you?

Nijeer Parks: Pretty much just facial recognition. When I asked 'em like, "Well, how did you come to get to me?" Like, "The-- like, the computer brung you up. The computer's not just gonna lie."

Police said a suspect allegedly shoplifted from this Hampton Inn and nearly hit an officer with a car while escaping the scene. They ran this driver's license the suspect gave them through a facial recognition program. It's not clear if this is the perpetrator since law enforcement said it's a fake ID. According to police reports, the search returned, "a high-profile comparison to Nijeer Parks." He spent more than a week in jail before he was released to fight his case in court.

Nijeer Parks: I think they looked at my background, they pretty much figured, like, "He had the jacket, we got 'em. He ain't-- he's not gonna fight it."

Anderson Cooper: When you say you had a jacket, you had prior convictions.

Nijeer Parks: Yes.

Anderson Cooper: What prior convictions do you have?

Nijeer Parks: I've been convicted for selling drugs, I've been in prison twice for it. But I've been home since 2016, had a job nonstop the whole time.

Facing 20 years in jail, Parks says he considered taking a plea deal.

Nijeer Parks: I knew I didn't do it, but it's like, I got a chance to be home, spending more time with my son, or I got a chance to come home, and he's a grown man and might have his own son.

Anderson Cooper: 'Cause I think most people think, "Well, if I didn't commit a crime, there's no way I would accept a plea deal."

Nijeer Parks: You would say that until you're sittin' right there.

After eight months, prosecutors failed to produce any evidence in court linking Parks to the crime, and in October 2019, the case was dismissed. Parks is now the third Black man since last summer to come forward and sue for wrongful arrest involving facial recognition. Woodbridge police and the prosecutor involved in Parks' case declined to speak with us. A spokesman for the town told us that they've denied Parks' civil claims in court filings.

Anderson Cooper: If this has been used hundreds of thousands of times as leads in investigations, and you can only point to three arrests based on misidentification by this technology, in the balance is that so bad?

Clare Garvie: The fact that we only know of three misidentifications is more a product of how little we know about the technology than how accurate it is.

Anderson Cooper: Do you think there're more?

Clare Garvie: Yes. I have every reason to believe there are more. And this is why: the person who's accused almost never finds out that it was used.

Robert Williams says he only found out it was used after detectives told him the computer got it wrong. He's sued with the help of the ACLU. The city of Detroit has denied any legal responsibility and told us they hope to settle.

While the use of facial recognition technology by police departments continues to grow, so do calls for greater oversight. Within the last two years, one city and one state have put a moratorium on the technology and 19 cities have banned it outright.

Produced by Nichole Marks and David M. Levine. Associate producer, Annabelle Hanflig. Edited by Joe Schanzer.

Anderson Cooper, anchor of CNN's "Anderson Cooper 360," has contributed to 60 Minutes since 2006. His exceptional reporting on big news events has earned Cooper a reputation as one of television's pre-eminent newsmen.