Since the outbreak of the coronavirus pandemic, our news station’s goal has been to deliver the most accurate, truthful and up-to-date information possible about COVID-19.

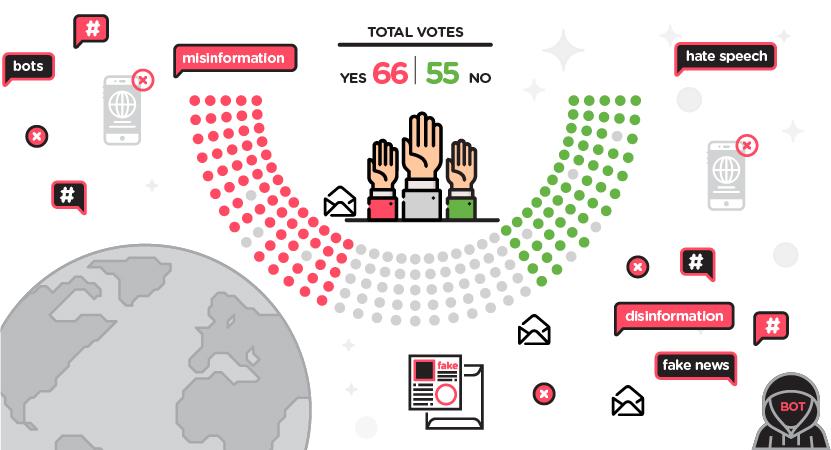

While we feel we have accomplished that goal as well as any news outlet could in these times, we have also seen the rise of misinformation about COVID-19 and the vaccines coming not only from less-reputable sources, but on our own social media platforms, in the comments and replies laden with conspiracies, misrepresentations, and outright lies about this global health crisis.

From the 'Information Age' to the 'Affirmation Age'

The mid-20th century is generally considered the beginning of the “Information Age,” which ran from the development of the transistor through the rise of the personal computer in the 1980s and ’90s to the ubiquitous adoption of the internet by the year 2000.

“We’re not in an age of information anymore,” says Dr. Michael Bugeja, a professor of media and ethics at the Greenlee School of Journalism at Iowa State University. “People do not want information anymore in a sectarian society. They want affirmation. And if you're not affirming with your factual news coverage, what the situation should be, they're just going to go to another site that does affirm them.”

The ‘Infodemic’

Misinformation about COVID-19 and the vaccines is such a prevalent topic that scholars around the world have labeled the phenomenon the “Infodemic.”

“The proliferation of false and misleading claims about COVID-19 vaccines is a major challenge to public health authorities around the world, as such claims sow uncertainty, contribute to vaccine hesitancy and generally undermine the efforts to vaccinate as many people as possible,” state the authors of “Studying the COVID-19 infodemic at scale,” published on June 10, 2021.

A May 2021 study from the Observatory on Social Media at Indiana University found that across Facebook, Twitter and YouTube, “low-credibility content,” as a whole, has a higher prevalence than content from any single high-credibility source.

The problem is compounded on Facebook, the study’s authors assert, because more low-credibility information is dispersed by “high-status” accounts; verified accounts yield almost 20% of original posts and 70% of reshares of low-credibility content there. These accounts are dubbed “infodemic superspreaders.”

The study found that the ratio of low- to high-credibility information is lower on Facebook than on Twitter, but the impact of low-credibility content on Facebook may be higher, as 69% of adults in the U.S. use Facebook versus 22% who use Twitter. Facebook is a pathway to consuming online news for about 43% of U.S. adults, versus 12% who get their news from Twitter, according to the study.

Indeed, our news website’s own metrics back up those figures. In 2021, 33.3% of traffic to News5Cleveland.com came from social media, and 93% of those sessions came from Facebook.

Are we aiding and abetting the Infodemic?

While the Indiana University study focused on less-than-reputable primary sources that publish original posts and tweets containing misinformation, over the past two years, our news station has seen a marked increase in misinformation spread on our own Facebook page – not by us, but in the comments and replies to the factual news content we publish.

A brief analysis of posts on our page suggests that the volume of misinformation coming from those who interact with our social media platforms isn’t slowing down, even as more hard data and factual analysis of COVID-19 and vaccines become available.

On an Oct. 26, 2020, Facebook post marking the 200,000th COVID-19 case in Ohio, there were over 232 top-level comments, not counting replies to comments. Of those, at least 20 comments could be considered untrue statements. It could be argued that many, many more could be considered misinformation – for example, comments questioning the veracity of the data, or simply calling our news story a lie. However, about 10% of the comments on the post were verifiably false.

More than a year later, on Nov. 16, 2021, in another post about the rise in COVID cases and the low vaccination rates in Ohio, roughly the same ratio of misinformation comments exists: 13 of the 115 comments were verifiably false.

Some of the comments that we considered verifiably false include:

While that doesn’t seem like an overwhelming number of falsehoods, consider the fact that our news station has one opportunity to present what we consider the facts, whereas Facebook users have a literally limitless platform to spout as many mistruths as they desire.

What have we done about it?

As a reputable news station and a champion of our community, we, of course, abhor the misinformation that is spread daily on our social media platforms, where a significant portion of our audience digests our content.

Both behind the scenes and in full view of our audience, we have employed three basic strategies in combating the misinformation spread in our purview: ignore it, refute it, or remove it.

In the earliest stages of the pandemic, we generally ignored all but the most egregious mistruths about COVID-19. To be sure, the novel coronavirus was still very much novel, and there were varying viewpoints and conflicting data among even the most venerated organizations studying the disease and its effects.

Add to that the fact that we were and continue to be advocates and beneficiaries of the rights enshrined in the First Amendment - those of free speech and a free press. When we intercede to counter or remove statements made by our viewers, it is only in the most dire of circumstances - generally when threats, slander, and unsubstantiated allegations are involved.

And in the past, and even largely during the pandemic, our audience would often police itself. When one viewer commented with a piece of misinformation, oftentimes many more would reply to refute it, and there would be a spirited debate on the topic. At times perhaps too spirited, certainly, but overall, our viewers are civil and courteous, even in times of disagreement.

As the pandemic continued, and as the volume and falsity of misinformation continued to increase, we began to take a stand against it in a fashion that made the most sense to us: by reporting on it and refuting it. In 2020 and 2021, we published many news articles addressing the most common and most outrageous myths about the disease and the vaccines. We even hosted several live question-and-answer sessions on Facebook with medical experts, allowing our viewers to interact with and learn directly from these experts.

Finally, there have been rare instances in which the misinformation spread on our platform, and pointed out by our viewers, was so egregious and potentially harmful to individuals that we removed it entirely. We treated these claims as we would unsubstantiated allegations against another person - just as we would not allow a user to publicly accuse someone of murder without proof, we took steps to remove falsehoods that could potentially endanger the community.

Each of these strategies has a downside, and no matter what we have done, we have been accused of not doing enough, doing too much, or doing the wrong thing to counter the tide of misinformation that was rising on our social platforms.

Bugeja said that our station, like other news outlets, is now serving only our dedicated readers and viewers, and only those viewers are open to the facts and factual analysis we provide.

“But for those who are trolling your reports, there's nothing you're going to do to change the troll’s viewpoint,” Bugeja said. “That viewpoint is part of his/her/their life, and their search for affirmation, and their vindictiveness, at times, against anybody whose viewpoint counters their perception of the world.”

What can be done?

The authors of the Indiana University study acknowledge that critical questions remain about how social media platforms can handle the flow of dangerous misinformation. As Twitter and Facebook have increased their moderation of this misinformation, they have been accused of political bias.

“While there are many legal and ethical considerations around free speech and censorship, our work suggests that these questions cannot be avoided and are an important part of the debate around how we can improve our information ecosystem,” the study concludes.

Bugeja believes education must be what counters the transition from information to affirmation.

“There is only one antidote to this awful state of affairs, and that is to teach,” he said. “Media and technology literacy in the classroom and to start at an early age, and particularly by the time we get to college.”

Bugeja cited a study that found that 57% of a student’s waking hours are spent looking at a cellphone.

“And most of those are not looking at a web site, and most of those are getting their news from social media,” he said. “So the long-term outlook now is that we have to start requiring technology and media literacy.”

Technology and media literacy in the classroom is something Bugeja has been advocating for nearly two decades, albeit with limited success.

“We're living in a digital age; we're living by our cell phones and by our screens,” he said. “So we need to know how to use them…The only long-term solution right now is through education.”
