Vendia sponsored this post.

While conventional data warehouses and data lakes have become common practice for analytics workloads, they don’t solve the broader enterprise problems of sharing real-time operational data among departments or across companies. This three-part series explores the challenges and solutions that arise when integrating business data across different applications, clouds and organizations in a modern IT stack.

Canyon Spanning — The Foundational IT Challenge

Tim Wagner

Tim is the inventor of AWS Lambda and a former general manager of AWS Lambda and Amazon API Gateway services. He has also served as vice president of engineering at Coinbase, where he managed design, security and product management teams. Tim co-founded Vendia to help organizations of all sizes share data more effectively across clouds and companies, and he serves as its CEO.

One of the most enduring and foundational challenges for IT professionals, regardless of their organization’s size or industry, is getting data where it belongs. Computing and other types of workload processing, critical as they are, can’t even be contemplated if the data that drives them isn’t readily available. This problem has existed in some fashion since the emergence of commercial digital computing in the 1950s. However, structural trends have massively increased the scale and complexity of these data-sharing and “canyon-spanning” problems. Those trends include SaaS-style applications, the emergence of public clouds and the commensurate need for multicloud strategies, and the increasingly complex, globe-spanning nature of business partnerships.

These “canyons” can take many forms in a modern IT stack:

Sponsor Note: Vendia is a real-time data cloud company. Their flagship product, Vendia Share, helps organizations rapidly build transactional applications that need to access critical data stuck in other applications, data stores, and silos that span departments, clouds, and even partners.

Data sharing, initially in the form of data warehouses and more recently through data lakes, is a well-known pattern to IT architects when applied to analytics data that drives business intelligence (BI), AI/ML (artificial intelligence/machine learning) model training and similar activities. Vendors such as Snowflake incorporate multicloud data sharing in their solution, enabling IT professionals to more easily compose and share their analytics workloads.

However, data lakes represent only a fraction of the data under IT’s purview; in fact, the majority of the data stored in, transferred through and computed on by IT systems is actually operational data. Operational data differs from analytics data in several ways:

Interpreting the Varied Nomenclature around Real-Time Data Sharing

While operational data, and the need to share it, is ubiquitous, the fragmented nature of previous approaches means that there is no clear, consistent terminology for either the problem or its solution. The data itself may be referred to variously as “real-time,” “operational,” “transactional,” “OLTP” (online transaction processing) or “application” data. Aggregation solutions may be described as real-time data lakes, real-time data warehouses, real-time data-sharing solutions or real-time data meshes.

Older-generation approaches are often identified as EAI (enterprise application integration) and occasionally as EiPaaS (enterprise integration platform as a service), or they are named for their protocols, such as EDI (electronic data interchange) or emerging industry-specific protocols such as FHIR.

Adjacent strategies include “multicloud” (or “cross-cloud” or “polycloud”) architectures and ETL/EL solutions (which may be described as SaaS or “no code”). “Reverse OLAP” is a term sometimes used to describe using the results of calculations performed in a data lake to create a feedback loop that informs or updates an operational system (loosely speaking, an inverse of the more typical operational-to-analytics ETL flow).
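To make the feedback-loop idea concrete, here is a minimal Python sketch of a result computed on the analytics side being written back to an operational record. Everything in it is a hypothetical illustration rather than any vendor's API: the in-memory dictionaries stand in for an operational store and a data lake, and the names `compute_lifetime_spend`, `write_back_tiers` and the 1,000 spending threshold are assumptions chosen for the example.

```python
from collections import defaultdict

# Hypothetical operational store: live customer records keyed by ID.
operational_customers = {
    "c1": {"name": "Acme", "tier": "standard"},
    "c2": {"name": "Globex", "tier": "standard"},
}

# Hypothetical analytics-side copy of order history, as a conventional
# operational-to-analytics ETL flow might have loaded it into a data lake.
lake_orders = [
    {"customer_id": "c1", "amount": 1200.0},
    {"customer_id": "c1", "amount": 900.0},
    {"customer_id": "c2", "amount": 150.0},
]


def compute_lifetime_spend(orders):
    """Analytics step: total order amount per customer."""
    totals = defaultdict(float)
    for order in orders:
        totals[order["customer_id"]] += order["amount"]
    return dict(totals)


def write_back_tiers(customers, spend_by_customer, threshold=1000.0):
    """Feedback step: update operational records from the analytics result.

    Returns the IDs of the customers whose records were changed.
    """
    updated = []
    for customer_id, total in spend_by_customer.items():
        if customer_id in customers and total >= threshold:
            customers[customer_id]["tier"] = "premium"
            updated.append(customer_id)
    return updated


spend = compute_lifetime_spend(lake_orders)
changed = write_back_tiers(operational_customers, spend)
```

In a real deployment the two stores would be separate systems, and the write-back step would have to deal with the latency, consistency and access-control concerns that this series discusses.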

Legacy Solutions and Their Limitations

Given the long history of operational data sharing in companies, it’s not surprising that a variety of approaches have been developed over the years. Most of these legacy approaches are artifacts of the period in which they were initially conceived. Below, we explore each of the traditional vendor categories and examine their shortcomings when they are applied to modern, usually cloud-based, workloads.

Figure 1: Point-to-point architectures created with EAI-based solutions

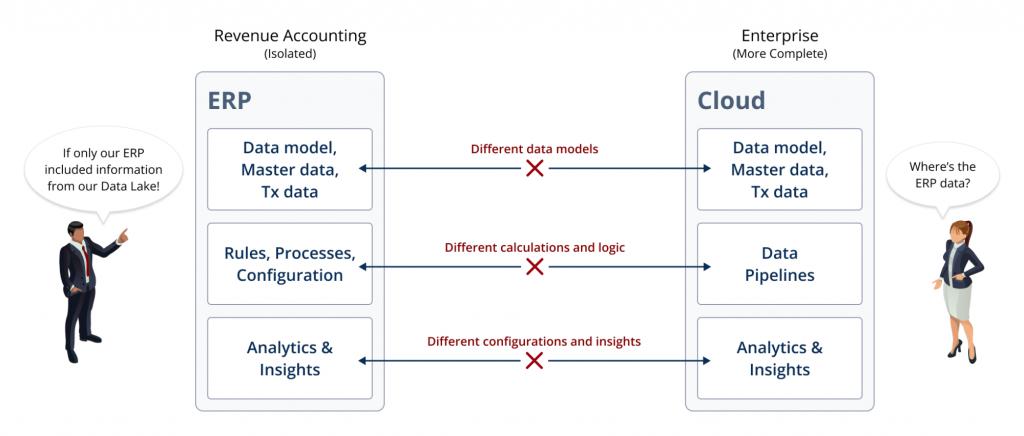

Figure 2: Data-sharing challenges across different tech stacks

Unfortunately, the first generation of blockchains wasn’t operationally ready for enterprise use cases: Their high latency, low throughput, high costs, lack of scalability and fault tolerance, and complex infrastructure deployment and management overhead made them ill-suited to real-world use cases.

Figure 3: The decentralization of blockchains provides a source of truth while maintaining separate data stores.

All of the above approaches suffer from inherent limitations when used to tackle real-time data-sharing challenges; none of them is an ideal foundation for a real-time data mesh. An ideal solution would offer the single source of truth achievable with a blockchain, together with the low latency, high throughput and fine-grained data controls more typical of an EAI-based solution, and the scalability and fault-tolerance benefits of a public cloud service.

Next up

In Part 2 of this series, we will explore how these elements can come together in a best-of-breed data mesh. We define the real-time data mesh and discuss the key tenets for incorporating it into modern IT stacks.

The New Stack is a wholly owned subsidiary of Insight Partners, an investor in the following companies mentioned in this article: Real.